9. Approximate Methods for Solving One-Particle Schrödinger Equations#

Up to this point, we’ve focused on systems for which we can solve the Schrödinger equation exactly. Unfortunately, there are very few such systems, and their relevance for real chemical systems is very limited. This motivates approximate methods for solving the Schrödinger equation. One must be careful, however: if one makes poor assumptions, the results of approximate methods can be very poor. Conversely, with appropriate insight, approximation techniques can be extremely useful.

Expansion in a Basis#

We have seen that the eigenvectors of a Hermitian operator form a complete basis, and can be chosen to be orthonormal. We have also seen how a wavefunction can be expanded in a basis,

\[ \psi(x) = \sum_{k=0}^{\infty} c_k \phi_k(x) \]

Note that there is no requirement that the basis set, \(\{\phi_k(x) \}\), be eigenvectors of a Hermitian operator: all that matters is that the basis set is complete. For real problems, of course, one can choose only a finite number of basis functions,

\[ \psi(x) \approx \sum_{k=0}^{N_{\text{basis}}-1} c_k \phi_k(x) \]

but as the number of basis functions, \(N_{\text{basis}}\), increases, results should become increasingly accurate.
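As a small illustration of this convergence, the sketch below expands the function \(\cos(\pi x/2)\) on \(-1 \le x \le 1\) in Legendre polynomials and reports the largest pointwise error for a few basis-set sizes. The choice of Legendre polynomials, the target function, and the helper names (`target`, `legendre_coefficient`, `max_error`) are assumptions made for this example only; any complete basis would serve.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import eval_legendre


def target(x):
    """Function to expand on [-1, 1] (chosen for illustration)."""
    return np.cos(np.pi * x / 2)


def legendre_coefficient(k):
    """c_k = (2k + 1)/2 * integral of f(x) P_k(x) over [-1, 1].

    The factor (2k + 1)/2 appears because Legendre polynomials are
    orthogonal but not normalized.
    """
    integral = quad(lambda x: target(x) * eval_legendre(k, x), -1.0, 1.0)[0]
    return 0.5 * (2 * k + 1) * integral


def max_error(n_basis):
    """Largest pointwise error of the n_basis-term expansion on a grid."""
    grid = np.linspace(-1.0, 1.0, 201)
    approx = sum(legendre_coefficient(k) * eval_legendre(k, grid)
                 for k in range(n_basis))
    return np.max(np.abs(approx - target(grid)))


sizes = (1, 3, 5, 7)
errors = [max_error(n) for n in sizes]
for n, err in zip(sizes, errors):
    print(f"N_basis = {n}: max error = {err:.2e}")
```

Only odd basis-set sizes are listed because the target function is even, so the odd-degree polynomials contribute nothing.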

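Substituting this expression for the wavefunction into the time-independent Schrödinger equation,

\[ \hat{H} \sum_{k} c_k \phi_k(x) = E \sum_{k} c_k \phi_k(x) \]

Multiplying on the left by \(\left(\phi_j(x) \right)^*\) and integrating over all space,

\[ \sum_{k} \left[ \int \left(\phi_j(x) \right)^* \hat{H} \phi_k(x) \, dx \right] c_k = E \sum_{k} \left[ \int \left(\phi_j(x) \right)^* \phi_k(x) \, dx \right] c_k \]

At this stage we usually define the Hamiltonian matrix, \(\mathbf{H}\), as the matrix with elements

\[ h_{jk} = \int \left(\phi_j(x) \right)^* \hat{H} \phi_k(x) \, dx \]

and the overlap matrix, \(\mathbf{S}\), as the matrix with elements

\[ s_{jk} = \int \left(\phi_j(x) \right)^* \phi_k(x) \, dx \]

If the basis is orthonormal, then the overlap matrix is equal to the identity matrix, \(\mathbf{S} = \mathbf{I}\), and its elements are therefore given by the Kronecker delta, \(s_{jk} = \delta_{jk}\).

The Schrödinger equation can therefore be written as a generalized matrix eigenvalue problem:

\[ \mathbf{H} \mathbf{c} = E \mathbf{S} \mathbf{c} \]

or, in element-wise notation, as:

\[ \sum_{k} h_{jk} c_k = E \sum_{k} s_{jk} c_k \]

In the special case where the basis functions are orthonormal, \(\mathbf{S} = \mathbf{I}\) and this is an ordinary matrix eigenvalue problem,

\[ \mathbf{H} \mathbf{c} = E \mathbf{c} \]

or, in element-wise notation, as:

\[ \sum_{k} h_{jk} c_k = E c_j \]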

Solving the Secular Equation#

In the context of quantum chemistry, the generalized eigenvalue problem

\[ \mathbf{H} \mathbf{c} = E \mathbf{S} \mathbf{c} \]

is called the secular equation. To solve the secular equation:

1. Choose a basis, \(\{|\phi_k\rangle \}\), and a basis-set size, \(N_{\text{basis}}\).

2. Evaluate the matrix elements of the Hamiltonian and the overlap matrix.

3. Solve the generalized eigenvalue problem.

Because of the variational principle, the lowest eigenvalue will always be greater than or equal to the true ground-state energy.
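The three steps can be sketched in a few lines. The example below is only an illustration under stated assumptions: it uses a free particle in a box on \(-1 \le x \le 1\) in atomic units (so \(\hat{H} = -\tfrac{1}{2}\tfrac{d^2}{dx^2}\)), a hand-picked two-function polynomial basis, and a hypothetical helper `integrate`; `numpy.polynomial.Polynomial` is used so that the integrals are exact.

```python
import numpy as np
from numpy.polynomial import Polynomial
from scipy.linalg import eigh


def integrate(p, a=-1.0, b=1.0):
    """Definite integral of a Polynomial over [a, b]."""
    antiderivative = p.integ()
    return antiderivative(b) - antiderivative(a)


# Step 1: choose a basis (here 1 - x^2 and x^2 - x^4; both vanish at x = +/-1)
basis = [Polynomial([1, 0, -1]), Polynomial([0, 0, 1, 0, -1])]
n = len(basis)

# Step 2: evaluate the overlap and Hamiltonian matrix elements
s = np.array([[integrate(basis[j] * basis[k]) for k in range(n)]
              for j in range(n)])
h = np.array([[integrate(-0.5 * basis[j] * basis[k].deriv(2)) for k in range(n)]
              for j in range(n)])

# Step 3: solve the generalized eigenvalue problem H c = E S c
energies, coeffs = eigh(h, s)

print("lowest eigenvalue:      ", energies[0])
print("exact ground state (a.u.):", np.pi**2 / 8)
```

The lowest eigenvalue lies slightly above \(\pi^2/8\), as the variational principle requires.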

Example for the Particle-in-a-Box#

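As an example, consider an electron confined to a box with length 2 Bohr, stretching from \(x=-1\) to \(x=1\). We know that the exact energy of this system is

\[ E_n = \frac{\pi^2 n^2}{8} \qquad n = 1, 2, 3, \ldots \]

The exact wavefunctions are easily seen to be

\[ \psi_n(x) = \begin{cases} \cos\left(\tfrac{n \pi x}{2}\right) & n = 1, 3, 5, \ldots \\ \sin\left(\tfrac{n \pi x}{2}\right) & n = 2, 4, 6, \ldots \end{cases} \]

However, for pedagogical purposes, suppose we did not know these answers. We know that the wavefunction will be zero at \(x= \pm1\), so we might hypothesize a basis like:

\[ \phi_k(x) = (1-x)(1+x)x^k \qquad k = 0, 1, 2, \ldots \]

The overlap matrix elements are

\[ s_{jk} = \int_{-1}^{1} (1-x)^2(1+x)^2 x^{j+k} \, dx \]

This integral is zero when \(k+j\) is odd. Specifically,

\[ s_{jk} = \begin{cases} 0 & k+j \text{ odd} \\ 2\left(\dfrac{1}{k+j+5} - \dfrac{2}{k+j+3} + \dfrac{1}{k+j+1}\right) & k+j \text{ even} \end{cases} \]

and the Hamiltonian matrix elements are

\[ h_{jk} = \int_{-1}^{1} \phi_j(x) \left(-\frac{1}{2}\frac{d^2}{dx^2}\right) \phi_k(x) \, dx \]

This integral is also zero when \(k+j\) is odd. Specifically,

\[ h_{jk} = \begin{cases} 0 & k+j \text{ odd} \\ -\left(\dfrac{(k+2)(k+1)}{k+j+3} - \dfrac{(k+2)(k+1) + k(k-1)}{k+j+1} + \dfrac{k(k-1)}{k+j-1}\right) & k+j \text{ even} \end{cases} \]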

import numpy as np
from scipy.linalg import eigh
import matplotlib.pyplot as plt


def compute_energy_ground_state(n_basis):
    """Compute ground state energy by solving the Secular equations."""
    # assign S & H to zero matrices
    s = np.zeros((n_basis, n_basis))
    h = np.zeros((n_basis, n_basis))

    # loop over upper-triangular elements & compute S & H elements
    for j in range(0, n_basis):
        for k in range(j, n_basis):
            if (j + k) % 2 == 0:
                s[j, k] = s[k, j] = 2 * (1 / (k + j + 5) - 2 / (k + j + 3) + 1 / (k + j + 1))
                h[j, k] = h[k, j] = -1 * (((k + 2) * (k + 1)) / (k + j + 3) - ((k + 2) * (k + 1) + k * (k - 1)) / (k + j + 1) + (k**2 - k) / (k + j - 1))

    # solve Hc = ESc to get eigenvalues E
    e_vals = eigh(h, s, eigvals_only=True)
    return e_vals[0]

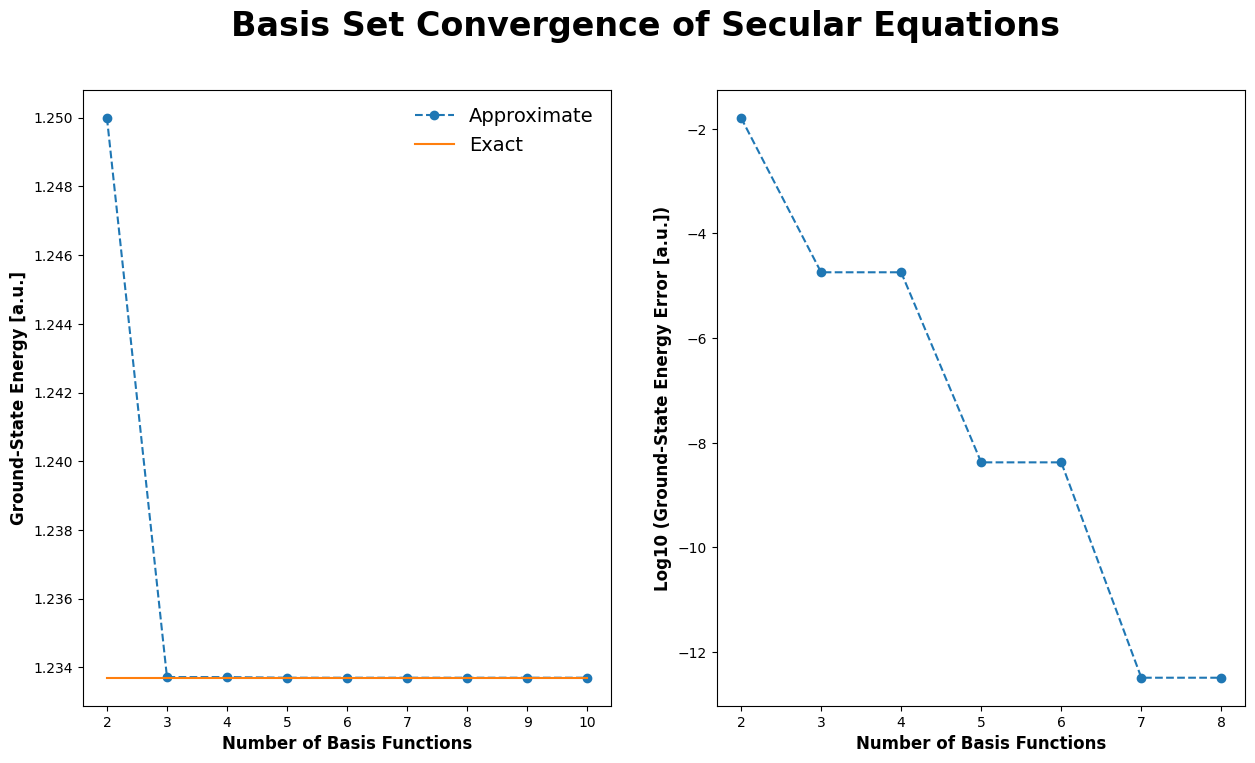

# plot basis set convergence of Secular equations
# -----------------------------------------------
# evaluate energy for a range of basis functions
n_values = np.arange(2, 11, 1)
e_values = np.array([compute_energy_ground_state(n) for n in n_values])
expected_energy = (1 * np.pi)**2 / 8.

plt.rcParams['figure.figsize'] = [15, 8]
fig, axes = plt.subplots(1, 2)
fig.suptitle("Basis Set Convergence of Secular Equations", fontsize=24, fontweight='bold')

for index, axis in enumerate(axes.ravel()):
    if index == 0:
        # plot approximate & exact energy
        axis.plot(n_values, e_values, marker='o', linestyle='--', label='Approximate')
        axis.plot(n_values, np.repeat(expected_energy, len(n_values)), marker='', linestyle='-', label='Exact')
        # set axes labels
        axis.set_xlabel("Number of Basis Functions", fontsize=12, fontweight='bold')
        axis.set_ylabel("Ground-State Energy [a.u.]", fontsize=12, fontweight='bold')
        axis.legend(frameon=False, fontsize=14)
    else:
        # plot log of approximate energy error (skip the last two values because they are zero)
        axis.plot(n_values[:-2], np.log10(e_values[:-2] - expected_energy), marker='o', linestyle='--')
        # set axes labels
        axis.set_xlabel("Number of Basis Functions", fontsize=12, fontweight='bold')
        axis.set_ylabel("Log10 (Ground-State Energy Error [a.u.])", fontsize=12, fontweight='bold')

plt.show()

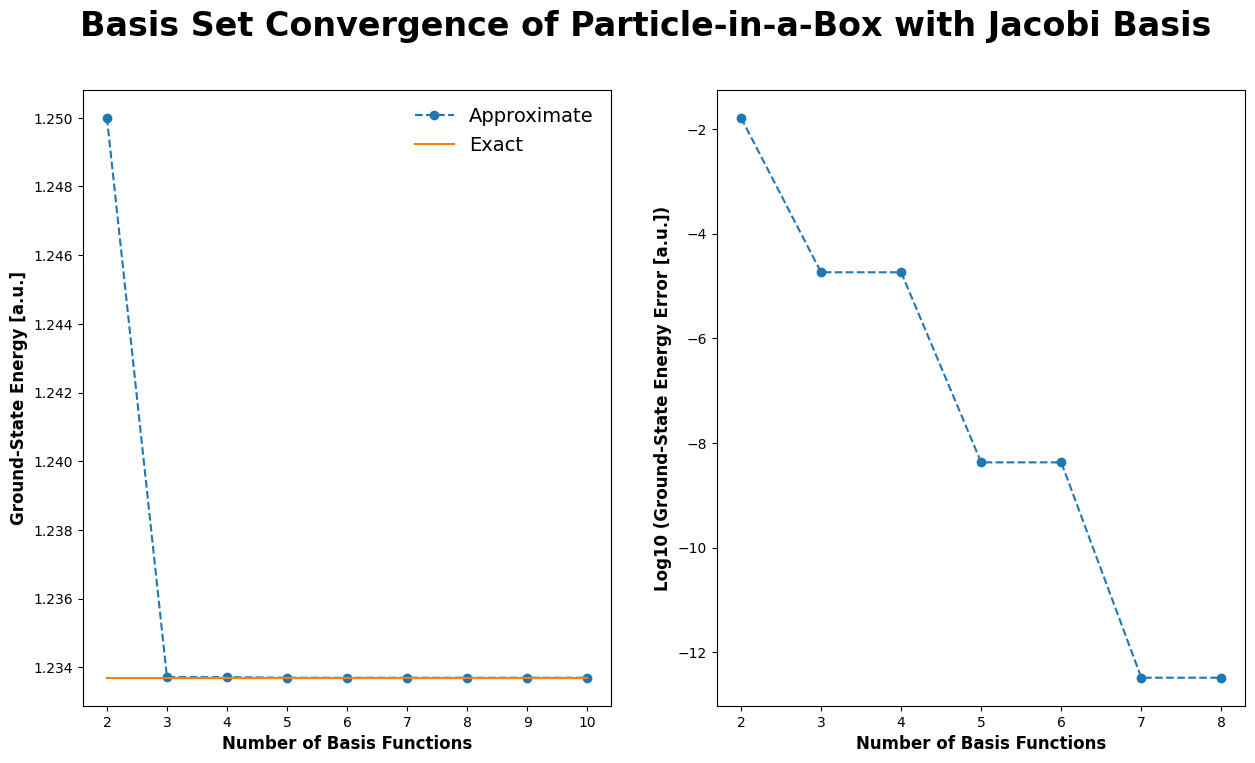
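The closed-form matrix elements used in the code above can be cross-checked against numerical quadrature. The sketch below does this for the first few basis functions \((1-x)(1+x)x^k\); the helper names (`phi`, `d2phi`, `s_closed`, `h_closed`, `s_quad`, `h_quad`) are introduced here for illustration and are not part of the original worksheet.

```python
import numpy as np
from scipy.integrate import quad


def phi(x, k):
    """Basis function (1 - x)(1 + x) x^k."""
    return (1 - x) * (1 + x) * x**k


def d2phi(x, k):
    """Second derivative of x^k - x^(k+2)."""
    first = k * (k - 1) * x**(k - 2) if k >= 2 else 0.0
    return first - (k + 2) * (k + 1) * x**k


def s_closed(j, k):
    """Closed-form overlap element (zero when j + k is odd)."""
    if (j + k) % 2 == 1:
        return 0.0
    n = j + k
    return 2 * (1 / (n + 5) - 2 / (n + 3) + 1 / (n + 1))


def h_closed(j, k):
    """Closed-form kinetic-energy element (zero when j + k is odd)."""
    if (j + k) % 2 == 1:
        return 0.0
    n = j + k
    return -((k + 2) * (k + 1) / (n + 3)
             - ((k + 2) * (k + 1) + k * (k - 1)) / (n + 1)
             + k * (k - 1) / (n - 1))


def s_quad(j, k):
    """Overlap element by numerical quadrature."""
    return quad(lambda x: phi(x, j) * phi(x, k), -1.0, 1.0)[0]


def h_quad(j, k):
    """Kinetic-energy element by numerical quadrature."""
    return quad(lambda x: -0.5 * phi(x, j) * d2phi(x, k), -1.0, 1.0)[0]


max_dev = max(
    max(abs(s_closed(j, k) - s_quad(j, k)), abs(h_closed(j, k) - h_quad(j, k)))
    for j in range(5) for k in range(5)
)
print("largest deviation between closed form and quadrature:", max_dev)
```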

Particle-in-a-Box with Jacobi polynomials#

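Similar results can be obtained with different basis functions. It is often convenient to use an orthonormal basis, where \(s_{jk} = \delta_{jk}\). For the particle-in-a-box with \(-1 \le x \le 1\), one such set of basis functions can be constructed from the (normalized) Jacobi polynomials,

\[ \phi_j(x) = N_j (1-x)(1+x) P_j^{(2,2)}(x) \]

where \(N_j\) is the normalization constant

\[ N_j = \sqrt{\frac{(2j+5)(j+4)(j+3)}{32(j+2)(j+1)}} \]

To evaluate the Hamiltonian it is useful to know that:

\[ \frac{d}{dx} P_n^{(\alpha,\beta)}(x) = \frac{n+\alpha+\beta+1}{2} P_{n-1}^{(\alpha+1,\beta+1)}(x) \]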

The Hamiltonian matrix elements could be evaluated analytically, but the expression is pretty complicated. It’s easier to merely evaluate them numerically as:

import numpy as np
from scipy.linalg import eigh
from scipy.special import eval_jacobi
from scipy.integrate import quad
import matplotlib.pyplot as plt


def compute_energy_ground_state(n_basis):
    """Compute ground state energy for a particle-in-a-Box with Jacobi basis."""

    def normalization(i):
        return np.sqrt((2 * i + 5) * (i + 4) * (i + 3) / (32 * (i + 2) * (i + 1)))

    def phi_squared(x, j):
        return (normalization(j) * (1 - x) * (1 + x) * eval_jacobi(j, 2, 2, x))**2

    def integrand(x, j, k):
        # second derivative of (1 - x^2) P_j^(2,2)(x), built term by term
        term = -2 * eval_jacobi(j, 2, 2, x)
        if j - 1 >= 0:
            term -= 2 * x * (j + 5) * eval_jacobi(j - 1, 3, 3, x)
        if j - 2 >= 0:
            term += 0.25 * (1 - x**2) * (j + 5) * (j + 6) * eval_jacobi(j - 2, 4, 4, x)
        return (1 - x) * (1 + x) * eval_jacobi(k, 2, 2, x) * term

    # assign H to a zero matrix
    h = np.zeros((n_basis, n_basis))

    # compute H elements
    for j in range(n_basis):
        for k in range(n_basis):
            integral = quad(integrand, -1.0, 1.0, args=(j, k))[0]
            h[j, k] = -0.5 * normalization(j) * normalization(k) * integral

    # solve Hc = Ec to get eigenvalues E
    e_vals = eigh(h, None, eigvals_only=True)
    return e_vals[0]

# plot basis set convergence of particle-in-a-Box with Jacobi basis
# -----------------------------------------------------------------
# evaluate energy for a range of basis functions
n_values = np.arange(2, 11, 1)
e_values = np.array([compute_energy_ground_state(n) for n in n_values])
expected_energy = (1 * np.pi)**2 / 8.

plt.rcParams['figure.figsize'] = [15, 8]
fig, axes = plt.subplots(1, 2)
fig.suptitle("Basis Set Convergence of Particle-in-a-Box with Jacobi Basis", fontsize=24, fontweight='bold')

for index, axis in enumerate(axes.ravel()):
    if index == 0:
        # plot approximate & exact energy
        axis.plot(n_values, e_values, marker='o', linestyle='--', label='Approximate')
        axis.plot(n_values, np.repeat(expected_energy, len(n_values)), marker='', linestyle='-', label='Exact')
        # set axes labels
        axis.set_xlabel("Number of Basis Functions", fontsize=12, fontweight='bold')
        axis.set_ylabel("Ground-State Energy [a.u.]", fontsize=12, fontweight='bold')
        axis.legend(frameon=False, fontsize=14)
    else:
        # plot log of approximate energy error (skip the last two values because they are zero)
        axis.plot(n_values[:-2], np.log10(e_values[:-2] - expected_energy), marker='o', linestyle='--')
        # set axes labels
        axis.set_xlabel("Number of Basis Functions", fontsize=12, fontweight='bold')
        axis.set_ylabel("Log10 (Ground-State Energy Error [a.u.])", fontsize=12, fontweight='bold')

plt.show()

🤔 Thought-Provoking Question: Why does adding odd-order polynomials to the basis set not increase the accuracy for the ground state wavefunction?#

Hint: The ground state wavefunction is an even function. A function is said to be even if it is symmetric about the origin, \(f(x) = f(-x)\). A function is said to be odd if it is antisymmetric around the origin, \(f(x) = - f(-x)\). Even-degree polynomials (e.g., \(1, x^2, x^4, \ldots\)) are even functions; odd-degree polynomials (e.g., \(x, x^3, x^5, \ldots\)) are odd functions. \(\cos(ax)\) is an even function and \(\sin(ax)\) is an odd function. \(\cosh(ax)\) is an even function and \(\sinh(ax)\) is an odd function. In addition,

A linear combination of odd functions is also odd.

A linear combination of even functions is also even.

The product of two odd functions is even.

The product of two even functions is even.

The product of an odd and an even function is odd.

The integral of an odd function from \(-a\) to \(a\) is always zero.

The integral of an even function from \(-a\) to \(a\) is always twice the value of its integral from \(0\) to \(a\); it is also twice its integral from \(-a\) to \(0\).

The first derivative of an even function is odd.

The first derivative of an odd function is even.

The k-th derivative of an even function is odd if k is odd, and even if k is even.

The k-th derivative of an odd function is even if k is odd, and odd if k is even.

These properties of odd and even functions are often very useful. In particular, the first and second properties indicate that if you know that the exact wavefunction you are looking for is odd (or even), it will be a linear combination of basis functions that are odd (or even). E.g., odd basis functions are useless for approximating even eigenfunctions.
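These parity arguments can be seen directly in the numbers. The sketch below rebuilds the overlap and Hamiltonian matrices for the \((1-x)(1+x)x^k\) basis and prints the lowest eigenvalue for basis sets of increasing size; the function name `ground_state_energy` is chosen for this example. Each time an odd-degree function is added (going from an odd to an even number of basis functions), the ground-state energy does not change.

```python
import numpy as np
from scipy.linalg import eigh


def ground_state_energy(n_basis):
    """Lowest eigenvalue of H c = E S c in the (1 - x)(1 + x) x^k basis."""
    s = np.zeros((n_basis, n_basis))
    h = np.zeros((n_basis, n_basis))
    for j in range(n_basis):
        for k in range(j, n_basis):
            # elements that couple an even and an odd function vanish
            if (j + k) % 2 == 0:
                n = j + k
                s[j, k] = s[k, j] = 2 * (1 / (n + 5) - 2 / (n + 3) + 1 / (n + 1))
                h[j, k] = h[k, j] = -((k + 2) * (k + 1) / (n + 3)
                                      - ((k + 2) * (k + 1) + k * (k - 1)) / (n + 1)
                                      + k * (k - 1) / (n - 1))
    return eigh(h, s, eigvals_only=True)[0]


energies = {n: ground_state_energy(n) for n in range(1, 7)}
for n, e in energies.items():
    print(f"N_basis = {n}: E_0 = {e:.10f}")
```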

🤔 Thought-Provoking Question: Why does one get exactly the same results for the Jacobi polynomials and the simpler \((1-x)(1+x)x^k\) polynomials?#

Hint: Can you rewrite one set of polynomials as a linear combination of the others?
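One way to explore this hint numerically is to write each Jacobi polynomial \(P_j^{(2,2)}(x)\) in terms of the monomials \(x^k\). The sketch below, which assumes `scipy.special.jacobi` to obtain the polynomial coefficients, collects them in a matrix (row \(j\) holds the coefficients of \(P_j^{(2,2)}\) in increasing powers of \(x\)). The matrix is lower triangular with a nonzero diagonal, so it is invertible, and the two sets of functions span the same space.

```python
import numpy as np
from scipy.special import jacobi

n_basis = 5
coeffs = np.zeros((n_basis, n_basis))
for j in range(n_basis):
    # poly1d stores the highest power first; reverse to increasing powers
    ascending = jacobi(j, 2, 2).coeffs[::-1]
    coeffs[j, :len(ascending)] = ascending

print(np.round(coeffs, 4))
print("rank:", np.linalg.matrix_rank(coeffs))
```

Multiplying every row by the common factor \((1-x)(1+x)\) (and by the normalization constants) does not change this conclusion.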

Perturbation Theory#

It is not uncommon that a Hamiltonian for which the Schrödinger equation is difficult to solve is “close” to another Hamiltonian that is easier to solve. In such cases, one can attempt to solve the easier problem, then perturb the system towards the actual, more difficult to solve, system of interest. The idea of leveraging easy problems to solve difficult problems is the essence of perturbation theory.

The Perturbed Hamiltonian#

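Suppose that for some Hamiltonian, \(\hat{H}\), we know the eigenfunctions and eigenvalues,

\[ \hat{H} |\psi_k \rangle = E_k |\psi_k \rangle \]

However, we are not interested in this Hamiltonian, but in a different Hamiltonian, \(\tilde{H}\), which we can write as:

\[ \tilde{H} = \hat{H} + \hat{V} \]

where obviously

\[ \hat{V} = \tilde{H} - \hat{H} \]

Let us now define a family of perturbed Hamiltonians,

\[ \hat{H}(\lambda) = \hat{H} + \lambda \hat{V} \]

where obviously:

\[ \hat{H}(0) = \hat{H} \qquad \qquad \hat{H}(1) = \tilde{H} \]

Writing the Schrödinger equation for \(\hat{H}(\lambda)\), we have:

\[ \hat{H}(\lambda) |\psi_k(\lambda) \rangle = E_k(\lambda) |\psi_k(\lambda) \rangle \]

This equation holds true for all values of \(\lambda\). Since we know the answer for \(\lambda = 0\), and we assume that the perturbed system described by \(\tilde{H}\) is close enough to \(\hat{H}\) for the solution at \(\lambda =0\) to be useful, we expand the energy and wavefunction as Taylor-Maclaurin series

\[ E_k(\lambda) = E_k(0) + \lambda E_k'(0) + \frac{\lambda^2}{2!} E_k''(0) + \frac{\lambda^3}{3!} E_k'''(0) + \cdots \]

\[ |\psi_k(\lambda) \rangle = |\psi_k(0) \rangle + \lambda |\psi_k'(0) \rangle + \frac{\lambda^2}{2!} |\psi_k''(0) \rangle + \frac{\lambda^3}{3!} |\psi_k'''(0) \rangle + \cdots \]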

When we write this, we are implicitly assuming that the derivatives all exist, which is not true if the zeroth-order state is degenerate (unless the perturbation does not break the degeneracy).

If we insert these expressions into the Schrödinger equation for \(\hat{H}(\lambda)\), we obtain a polynomial of the form:

This equation can only be satisfied for all \(\lambda\) if all its terms are zero, so

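The key equations that need to be solved are listed below, where \(\hat{V} = \tilde{H} - \hat{H}\) denotes the perturbation. First there is the zeroth-order equation, which is automatically satisfied:

\[ \hat{H}(0) |\psi_k(0) \rangle = E_k(0) |\psi_k(0) \rangle \]

The first-order equation is:

\[ \left(\hat{H}(0) - E_k(0)\right) |\psi_k'(0) \rangle + \left(\hat{V} - E_k'(0)\right) |\psi_k(0) \rangle = 0 \]

The second-order equation is:

\[ \left(\hat{H}(0) - E_k(0)\right) |\psi_k''(0) \rangle + 2\left(\hat{V} - E_k'(0)\right) |\psi_k'(0) \rangle - E_k''(0) |\psi_k(0) \rangle = 0 \]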

Higher-order equations are increasingly complicated, but still tractable in some cases. One usually applies perturbation theory only when the perturbation is relatively small, which usually suffices to ensure that the Taylor series expansion converges rapidly and higher-order terms are relatively insignificant.
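To get a feeling for how quickly the series converges, one can replace the operators by small matrices. The sketch below is a toy model, not one of the systems in these notes: the diagonal matrix `np.diag(e0)` plays the role of \(\hat{H}(0)\) and the symmetric matrix `v` plays the role of the perturbation, with all numerical values chosen arbitrarily. It compares the exact lowest eigenvalue of \(\hat{H}(0) + \lambda \hat{V}\) with the series truncated at first and second order.

```python
import numpy as np

# Toy model: unperturbed energies and an arbitrary symmetric perturbation
e0 = np.array([0.0, 1.0, 2.5, 4.0])
v = np.array([[0.3, 0.5, -0.2, 0.1],
              [0.5, -0.4, 0.6, 0.0],
              [-0.2, 0.6, 0.2, 0.3],
              [0.1, 0.0, 0.3, -0.1]])
k = 0  # state of interest (the ground state)

# Derivatives of the energy at lambda = 0
first_derivative = v[k, k]
second_derivative = 2 * sum(v[j, k]**2 / (e0[k] - e0[j])
                            for j in range(len(e0)) if j != k)


def exact_energy(lam):
    """Lowest eigenvalue of H(0) + lam * V."""
    return np.linalg.eigvalsh(np.diag(e0) + lam * v)[0]


def pt1_energy(lam):
    """Series truncated after the first-order term."""
    return e0[k] + lam * first_derivative


def pt2_energy(lam):
    """Series truncated after the second-order term."""
    return pt1_energy(lam) + 0.5 * lam**2 * second_derivative


lam = 0.05
error_pt1 = abs(pt1_energy(lam) - exact_energy(lam))
error_pt2 = abs(pt2_energy(lam) - exact_energy(lam))
print("first-order error: ", error_pt1)
print("second-order error:", error_pt2)
```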

Hellmann-Feynman Theorem#

The Hellmann-Feynman theorem has been discovered many times, most impressively by Richard Feynman, who included it in his undergraduate senior thesis. In simple terms:

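Hellmann-Feynman Theorem: Suppose that the Hamiltonian, \(\hat{H}(\lambda)\), depends on a parameter. Then the first-order change in the energy with respect to the parameter is given by the equation,

\[ \frac{dE}{d\lambda} = \left\langle \psi(\lambda) \left| \frac{\partial \hat{H}}{\partial \lambda} \right| \psi(\lambda) \right\rangle \]

where \(|\psi(\lambda)\rangle\) is the normalized eigenfunction of \(\hat{H}(\lambda)\) with eigenvalue \(E(\lambda)\).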
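A quick numerical check of the theorem can be made with matrices standing in for the operators. In the sketch below, the matrices `h0`, `v`, and `w` and the quadratic dependence on \(\lambda\) are arbitrary assumptions chosen for illustration; the derivative of the ground-state energy is estimated by a central finite difference and compared with the expectation value of \(\partial \hat{H}/\partial \lambda\).

```python
import numpy as np

# Toy Hamiltonian H(lam) = h0 + lam * v + lam**2 * w (all values arbitrary)
h0 = np.diag([0.0, 1.0, 2.5])
v = np.array([[0.3, 0.5, -0.2],
              [0.5, -0.4, 0.6],
              [-0.2, 0.6, 0.2]])
w = np.array([[0.1, 0.0, 0.2],
              [0.0, -0.3, 0.1],
              [0.2, 0.1, 0.0]])


def hamiltonian(lam):
    return h0 + lam * v + lam**2 * w


def dh_dlam(lam):
    """Derivative of the Hamiltonian with respect to the parameter."""
    return v + 2 * lam * w


def ground_state(lam):
    """Ground-state energy and normalized eigenvector."""
    energies, vectors = np.linalg.eigh(hamiltonian(lam))
    return energies[0], vectors[:, 0]


lam, step = 0.3, 1e-4
_, psi = ground_state(lam)
hellmann_feynman = psi @ dh_dlam(lam) @ psi
finite_difference = (ground_state(lam + step)[0]
                     - ground_state(lam - step)[0]) / (2 * step)

print("Hellmann-Feynman: ", hellmann_feynman)
print("finite difference:", finite_difference)
```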

Derivation of the Hellmann-Feynman Theorem by Differentiation Under the Integral Sign#

The usual way to derive the Hellmann-Feynman theorem uses the technique of differentiation under the integral sign. Therefore,

While such an operation is not always mathematically permissible, it is usually permissible, as should be clear from the definition of the derivative as a limit of a difference,

and the fact that the integral of a sum is the sum of the integrals. Using the product rule for derivatives, one obtains:

In the third-from-last line we used the eigenvalue relation and the Hermitian property of the Hamiltonian; in the last step we have used the fact that the wavefunctions are normalized and the fact that the derivative of a constant is zero to infer that the terms involving the wavefunction derivatives vanish. Specifically, we used

Derivation of the Hellmann-Feynman Theorem from First-Order Perturbation Theory#

Starting with the equation from first-order perturbation theory,

multiply on the left-hand-side by \(\langle \psi_k(0) |\). (I.e., multiply by \(\psi_k(0;x)^*\) and integrate.) Then:

Because the Hamiltonian is Hermitian, the first term is zero. The second term can be rearranged to give the Hellmann-Feynman theorem,

Perturbed Wavefunctions#

To determine the change in the wavefunction,

it is helpful to adopt the convention of intermediate normalization, whereby

for all \(\lambda\). Inserting the series expansion for \(|\psi(\lambda) \rangle\) one finds that

where in the second line we have used the normalization of the zeroth-order wavefunction, \(\langle \psi_k(0) | \psi_k(0) \rangle = 1\). Since this equation holds for all \(\lambda\), it must be that

Because the eigenfunctions of \(\hat{H}(0)\) are a complete basis, we can expand \( | \psi_k'(0) \rangle\) as:

but because \(\langle \psi_k(0) | \psi_k'(0) \rangle=0\), it must be that \(c_k = 0\). So:

We insert this expansion into the expression from first-order perturbation theory:

and multiply on the left by \(\langle \psi_l(0) |\), with \(l \ne k\).

Assuming that the k-th state is nondegenerate (so that we can safely divide by \( E_l(0) - E_k(0)\)),

and so:

Higher-order terms can be determined in a similar way, but we will only deduce the expression for the second-order energy change. Using the second-order terms from the perturbation expansion,

Projecting this expression against \(\langle \psi_k(0) |\), one has:

To obtain the last line we used the intermediate normalization of the perturbed wavefunction, \(\langle \psi_k(0) | \psi_k'(0) \rangle = 0\). Rewriting the expression for the second-order change in the energy, and then inserting the expression for the first-order wavefunction, gives

Notice that for the ground state (\(k=0\)), where \(E_0 - E_{j>0} < 0\), the second-order energy change is never positive, \( E_0''(0) \le 0\).
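The first-order wavefunction and second-order energy expressions can also be checked on a toy matrix model. In the sketch below (all numerical values are arbitrary assumptions), the unperturbed Hamiltonian is diagonal, so the unperturbed eigenvectors are unit vectors and the coefficient of \(|\psi_j(0)\rangle\) in \(|\psi_k'(0)\rangle\) is \(V_{jk}/(E_k(0) - E_j(0))\). This is compared with a finite-difference derivative of the exact eigenvector, rescaled to satisfy intermediate normalization.

```python
import numpy as np

# Toy model: diagonal H(0) and an arbitrary symmetric perturbation V
e0 = np.array([0.0, 1.0, 2.5, 4.0])
v = np.array([[0.3, 0.5, -0.2, 0.1],
              [0.5, -0.4, 0.6, 0.0],
              [-0.2, 0.6, 0.2, 0.3],
              [0.1, 0.0, 0.3, -0.1]])
k = 0  # ground state


def ground_state_intermediate(lam):
    """Ground-state eigenvector scaled so that its k-th component is 1."""
    _, vectors = np.linalg.eigh(np.diag(e0) + lam * v)
    c = vectors[:, 0]
    return c / c[k]


# First-order wavefunction from the sum-over-states formula
psi1_formula = np.array([v[j, k] / (e0[k] - e0[j]) if j != k else 0.0
                         for j in range(len(e0))])

# The same quantity from a central finite difference around lambda = 0
step = 1e-5
psi1_numerical = (ground_state_intermediate(step)
                  - ground_state_intermediate(-step)) / (2 * step)

# Second-order energy change, E''(0) = 2 <psi_k(0)| V |psi_k'(0)>
e2 = 2 * v[k] @ psi1_formula

print("formula:  ", psi1_formula)
print("numerical:", psi1_numerical)
print("E''(0) =", e2)
```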

The Law of Diminishing Returns and Accelerating Losses#

Suppose one is given a Hamiltonian that is parameterized in the general form used in perturbation theory,

According to the Hellmann-Feynman theorem, one has:

Consider two distinct values for the perturbation parameter, \(\lambda_1 < \lambda_2\). According to the variational principle, if one evaluates the expectation value of \(\hat{H}(\lambda_1)\) with \(\psi(\lambda_2)\) one will obtain an energy above the true ground-state energy. I.e.,

Or, more explicitly,

Similarly, the energy expectation value \(\hat{H}(\lambda_2)\) evaluated with \(\psi(\lambda_1)\) is above the true ground-state energy, so

Adding these two inequalities and cancelling out the factors of \(\langle \psi(\lambda_2) | \hat{H}(0) |\psi(\lambda_2) \rangle \) that appear on both sides of the inequality, one finds that:

or, using the Hellmann-Feynman theorem (in reverse),

Recall that \(\lambda_2 > \lambda_1\). Thus \(E_0'(\lambda_2) \le E_0'(\lambda_1)\). If the system is losing energy at \(\lambda_1\) (i.e., \(E_0'(\lambda_1) < 0\)), then at \(\lambda_2\) the system is losing energy at least as fast (\(E_0'(\lambda_2)\) is at least as negative as \(E_0'(\lambda_1)\)). This is the law of accelerating losses. If the system is gaining energy at \(\lambda_1\) (i.e., \(E_0'(\lambda_1) > 0\)), then at \(\lambda_2\) the system is gaining energy more slowly, or even losing energy (\(E_0'(\lambda_2)\) is no larger than \(E_0'(\lambda_1)\)). This is the law of diminishing returns.

If the energy is a twice-differentiable function of \(\lambda\), then one can infer that the second derivative of the energy is never positive, \(E_0''(\lambda) \le 0\).
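This concavity is easy to observe numerically. The sketch below uses a toy matrix model (a diagonal `h0` and a random symmetric `v` generated with a fixed seed, both assumptions for illustration), evaluates the ground-state energy on a grid of \(\lambda\) values, and checks that the finite-difference slope never increases.

```python
import numpy as np

# Toy model: H(lam) = h0 + lam * v with a random symmetric perturbation
rng = np.random.default_rng(1)
a = rng.normal(size=(6, 6))
h0 = np.diag(np.arange(6.0))
v = (a + a.T) / 2

lams = np.linspace(-2.0, 2.0, 81)
e_ground = np.array([np.linalg.eigvalsh(h0 + lam * v)[0] for lam in lams])

# Finite-difference slopes and second differences of E_0(lambda)
slopes = np.diff(e_ground) / np.diff(lams)
second_diff = e_ground[:-2] - 2 * e_ground[1:-1] + e_ground[2:]

print("largest second difference:", second_diff.max())
print("slope at the start and end of the grid:", slopes[0], slopes[-1])
```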

Example: Particle in a Box with a Sloped Bottom#

The Hamiltonian for an Applied Uniform Electric Field#

When a system cannot be solved exactly, one can solve it approximately using either perturbation theory or variational methods, the latter with either an explicit wavefunction form or a basis-set expansion.

To exemplify these approaches, we will use the particle-in-a-box with a sloped bottom. This is obtained when an external electric field is applied to a charged particle in the box. The force on the charged particle due to the field is

so for an electron in a box on which an electric field of magnitude \(F\) is applied in the \(+x\) direction, the force is

where \(e\) is the magnitude of the charge on the electron. The potential is

Assuming that the potential is zero at the origin for convenience, \(V(0) = 0\), the potential is thus:

The particle in a box with an applied field has the Hamiltonian

or, in atomic units,

For simplicity, we assume the case where the box has length 2 and is centered at the origin,

For small electric fields, we can envision solving this system by perturbation theory. We also expect that variational approaches can work well. We’ll explore how these strategies can work. It turns out, however, that this system can be solved exactly, though the treatment is far beyond the scope of this course. There are a few useful reference values, however: for a field strength of \(F=\tfrac{1}{16}\) the ground-state energy is 1.23356 a.u. and for a field strength of \(F=\tfrac{25}{8}\) the ground-state energy is 0.9063 a.u.; these can be compared to the unperturbed result of 1.23370 a.u. Some approximate formulas for the higher eigenvalues are available:

Note that to obtain these numbers from the reference data for the exact solution, you need to keep in mind that the reference data assumes the mass is \(1/2\) instead of \(1\), and that the reference data is for a box from \(0 \le x \le 1\) instead of \(-1 \le x \le 1\). This requires dividing the reference field by 16, shifting the energies by the field, and dividing the energy by 8 (because both the length of the box and the mass of the particle have doubled). In the end, \(F = \tfrac{1}{16} F_{\text{ref}}\) and \(E = \tfrac{1}{8}E_{\text{ref}}-\tfrac{1}{16}F_{\text{ref}}= \tfrac{1}{8}E_{\text{ref}}-F\).
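The conversion can be written as a two-line helper. In the sketch below the function name `convert_reference` is made up for this example; the reference ground-state energies 10.3685 (at \(F_{\text{ref}} = 1\)) and 32.2505 (at \(F_{\text{ref}} = 50\)) are the values used in the demonstration code later in this section.

```python
def convert_reference(f_ref, e_ref):
    """Convert reference (field, energy) to the conventions used here.

    Reference data: mass 1/2, box on 0 <= x <= 1.
    These notes:    mass 1,   box on -1 <= x <= 1.
    """
    field = f_ref / 16.0
    energy = e_ref / 8.0 - field
    return field, energy


for f_ref, e_ref in [(1.0, 10.3685), (50.0, 32.2505)]:
    field, energy = convert_reference(f_ref, e_ref)
    print(f"F = {field}, E = {energy:.7f} a.u.")
```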

Perturbation Theory for the Particle-in-a-Box in a Uniform Electric Field#

The First-Order Energy Correction is Always Zero#

The corrections due to the perturbation are all zero to first order. To see this, consider that, from the Hellmann-Feynman theorem,

This reflects the fact that this system has a vanishing dipole moment.
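The vanishing of the first-order correction can be confirmed by direct integration. The sketch below assumes the unperturbed eigenfunctions of the box on \(-1 \le x \le 1\) (cosines for odd \(n\), sines for even \(n\)) and evaluates \(\langle \psi_n | x | \psi_n \rangle\) numerically; the helper names are chosen for this example.

```python
import numpy as np
from scipy.integrate import quad


def psi(n, x):
    """Unperturbed eigenfunction, n = 1, 2, 3, ... (box from -1 to 1)."""
    arg = n * np.pi * x / 2
    return np.cos(arg) if n % 2 == 1 else np.sin(arg)


def x_expectation(n):
    """<psi_n | x | psi_n>, the first-order energy change per unit field."""
    return quad(lambda x: x * psi(n, x)**2, -1.0, 1.0)[0]


def norm(n):
    """<psi_n | psi_n>, which should equal 1."""
    return quad(lambda x: psi(n, x)**2, -1.0, 1.0)[0]


for n in range(1, 6):
    print(f"n = {n}: norm = {norm(n):.6f}, <x> = {x_expectation(n):.1e}")
```

The integrand \(x\,|\psi_n(x)|^2\) is odd for every \(n\), so each expectation value is zero to within the quadrature error.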

The First-Order Correction to the Wavefunction#

To determine the first-order correction to the wavefunction, one needs to evaluate integrals that look like:

From the properties of odd and even functions, and the fact that \(\psi_n(x)\) is odd if \(n\) is even, and vice versa, it’s clear that \(V_{mn} = 0\) unless \(m+n\) is odd. (That is, either \(m\) or \(n\), but not both, must be odd.) The integrals we need to evaluate all have the form

where \(m\) is even and \(n\) is odd. Using the trigonometric identity

we can deduce that the integral is

As mentioned before, this integral is zero unless \(m+n\) is odd. The cosine terms therefore vanish and, since \(\sin \tfrac{p \pi}{2} = (-1)^{(p-1)/2}\) for odd \(p\), we have

The first-order correction to the ground-state wavefunction is then:

The Second-Order Correction to the Energy#

The second-order correction to the energy is

This infinite sum is not trivial to evaluate, but we can investigate the first non-vanishing term for the ground state. (This is the so-called Unsöld approximation, and it’s based on the fact that the largest contribution to the sum probably comes from the term with the smallest denominator, corresponding to the state closest in energy to the reference state.) Thus:

Using this, we can estimate the ground-state energy for different field strengths as

For the field strengths for which we have exact results readily available, this gives

These results are impressively accurate, especially considering all the effects we have neglected.
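The single-term estimate is simple enough to reproduce in a few lines. The sketch below evaluates the matrix element \(\langle \psi_1 | x | \psi_2 \rangle\) by numerical quadrature, keeps only the \(j=2\) term of the second-order sum for the ground state, and compares with the reference energies quoted earlier (1.2335625 and 0.9063125 a.u.). The helper names are assumptions made for this example.

```python
import numpy as np
from scipy.integrate import quad


def psi(n, x):
    """Unperturbed eigenfunction, n = 1, 2, 3, ... (box from -1 to 1)."""
    arg = n * np.pi * x / 2
    return np.cos(arg) if n % 2 == 1 else np.sin(arg)


def x_element(m, n):
    """Matrix element <psi_m | x | psi_n> by quadrature."""
    return quad(lambda x: psi(m, x) * x * psi(n, x), -1.0, 1.0)[0]


def energy(n):
    """Unperturbed energy of state n in atomic units."""
    return n**2 * np.pi**2 / 8


v12 = x_element(1, 2)


def first_term_estimate(field):
    """Ground-state energy keeping only the j = 2 term of the sum."""
    return energy(1) + field**2 * v12**2 / (energy(1) - energy(2))


for field, reference in [(1 / 16, 1.2335625), (25 / 8, 0.9063125)]:
    print(f"F = {field}: estimate = {first_term_estimate(field):.6f}, "
          f"reference = {reference}")
```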

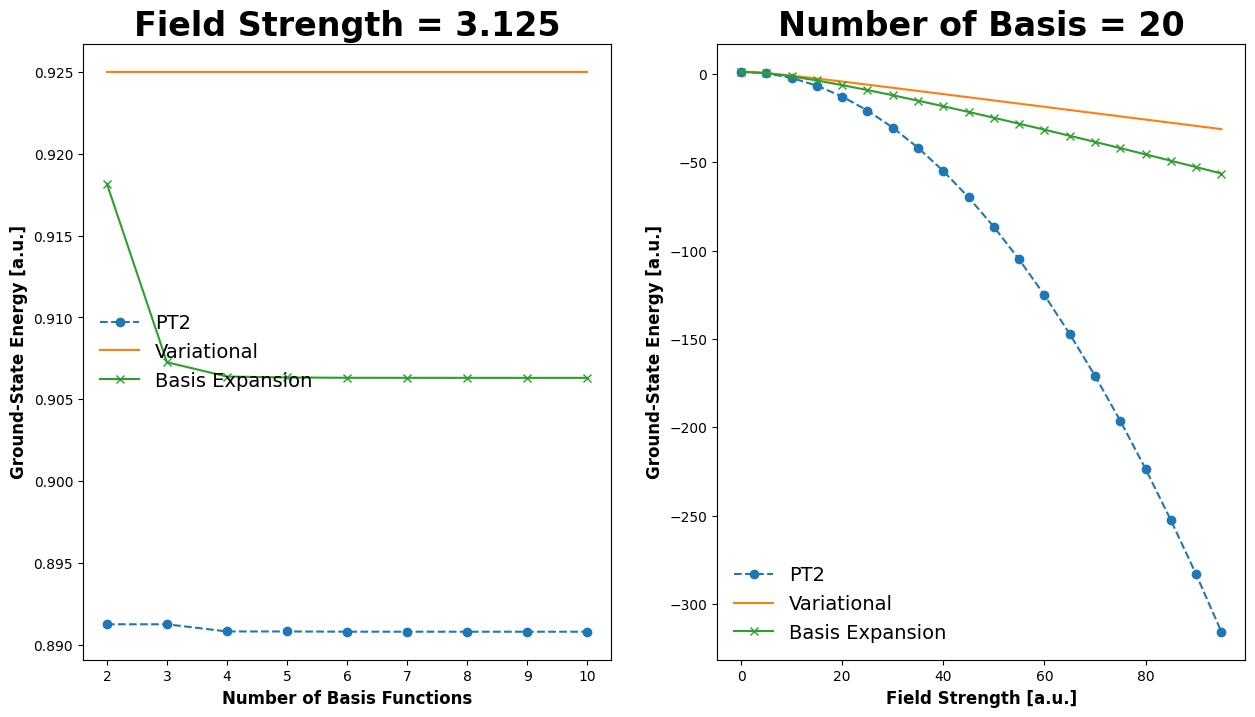

Variational Approach to the Particle-in-a-Box in a Uniform Electric Field#

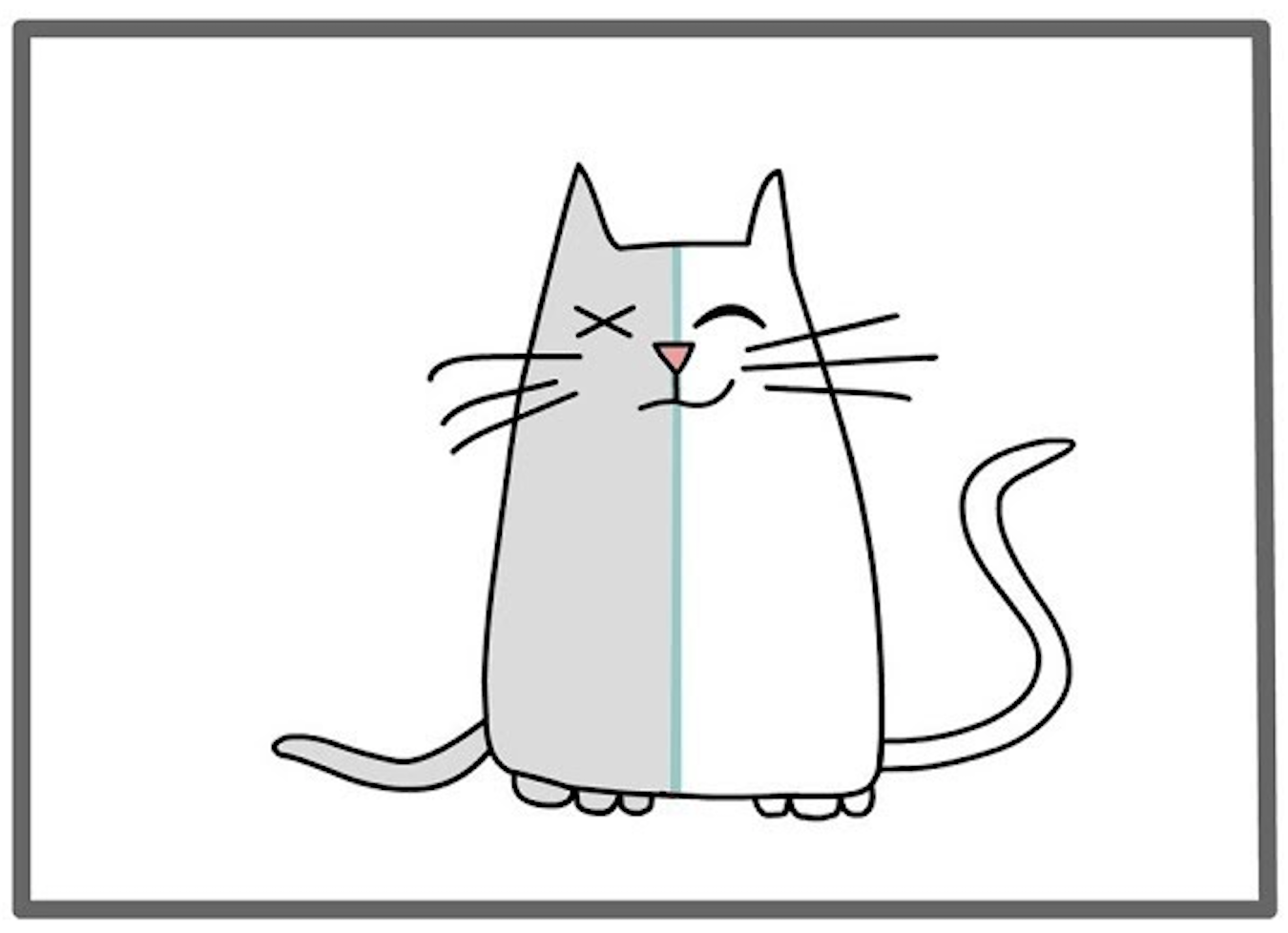

When the field is applied, it becomes more favorable for the electron to drift to the \(x<0\) side of the box. To accommodate this, we can propose a wavefunction ansatz for the ground state,

Clearly \(c = 0\) in the absence of a field, but \(c > 0\) is to be expected when the field is applied. We can determine the optimal value of \(c\) using the variational principle. First we need to determine the energy as a function of \(c\):

The denominator of this expression is easily evaluated

where we have defined the constant:

The numerator is

where we have used the integral:

This equation can be solved analytically because it is a cubic equation, but it is more convenient to solve it numerically.
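A minimal numerical version of this variational calculation is sketched below. It assumes the ansatz \(\psi_c(x) = (1+cx)\cos\left(\tfrac{\pi x}{2}\right)\) and the Hamiltonian \(-\tfrac{1}{2}\tfrac{d^2}{dx^2} + Fx\) inside the box, evaluates the energy expectation value by quadrature rather than from the closed-form integrals, and minimizes it with `scipy.optimize.minimize_scalar`. The search interval for \(c\) is an arbitrary choice, and the sign of the optimal \(c\) depends on the sign convention used for the field term.

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import minimize_scalar


def energy(c, field):
    """Energy expectation value of psi_c(x) = (1 + c x) cos(pi x / 2)."""
    def psi(x):
        return (1 + c * x) * np.cos(np.pi * x / 2)

    def dpsi(x):
        return (c * np.cos(np.pi * x / 2)
                - (np.pi / 2) * (1 + c * x) * np.sin(np.pi * x / 2))

    # kinetic energy written as (1/2) * integral of |psi'|^2, which is valid
    # because psi vanishes at both walls
    numerator = quad(lambda x: 0.5 * dpsi(x)**2 + field * x * psi(x)**2,
                     -1.0, 1.0)[0]
    denominator = quad(lambda x: psi(x)**2, -1.0, 1.0)[0]
    return numerator / denominator


def variational_energy(field):
    """Optimal parameter c and the corresponding energy."""
    result = minimize_scalar(energy, bounds=(-2.0, 2.0), args=(field,),
                             method="bounded")
    return result.x, result.fun


for field in (1 / 16, 25 / 8):
    c_opt, e_opt = variational_energy(field)
    print(f"F = {field}: c = {c_opt:.4f}, E = {e_opt:.7f} a.u.")
```

With \(c = 0\) the function `energy` returns the unperturbed value \(\pi^2/8\), which is a convenient sanity check.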

Basis-Set Expansion for the Particle-in-a-Box in a Uniform Electric Field#

As a final approach to this problem, we can expand the wavefunction in a basis set. The eigenfunctions of the unperturbed particle-in-a-box are a sensible choice here, though we could use polynomials (as we did earlier in this worksheet) without issue if we wished to do so. The eigenfunctions of the unperturbed problem are orthonormal, so the overlap matrix is the identity matrix

and the Hamiltonian matrix elements are

Using the results we have already determined for the matrix elements, then,

Demonstration#

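In the following code block, we’ll demonstrate how the energy converges as we increase the number of terms in our calculation. For the excited states at the larger field, note that the code evaluates the approximate energies at \(F = \tfrac{25}{4}\), whereas the reference values it prints correspond to \(F = \tfrac{25}{8}\); the disagreement in those lines therefore appears to come from this mismatch rather than from an error in the reference data.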

import numpy as np
from scipy.linalg import eigh
from scipy.optimize import minimize_scalar
from scipy.integrate import quad
import matplotlib.pyplot as plt


def compute_V(n_basis):
    """Compute the matrix <k|x|l> for an electron in a box from -1 to 1 in a unit external field, in a.u."""
    # initialize V to a zero matrix
    V = np.zeros((n_basis, n_basis))
    # Because Python is zero-indexed, our V matrix will be shifted by 1. I'll
    # make this explicit by making the counters km1 and lm1 (k minus 1 and l minus 1)
    for km1 in range(n_basis):
        for lm1 in range(n_basis):
            # The matrix element is zero unless (km1 + lm1) is odd, i.e. unless one
            # state is a cosine (even function) and the other is a sine (odd function).
            if (km1 + lm1) % 2 == 1:
                # The original expression used sign factors written as -1**n, which
                # Python parses as -(1**n) = -1 for every n. Simplifying those factors
                # gives the expression below; it has the correct magnitude,
                # 16*k*l / (pi**2 * (k**2 - l**2)**2) with k = km1 + 1 and l = lm1 + 1.
                # Every nonzero element is positive, which corresponds to a particular
                # choice of sign for each basis function and does not affect the
                # eigenvalues or the second-order energies.
                V[km1, lm1] = 4. / np.pi**2 * (1. / (km1 - lm1)**2 - 1. / (km1 + lm1 + 2)**2)
    return V


def energy_pt2(k, F, n_basis):
    """kth excited state energy in a.u. for an electron in a box of length 2 in the field F estimated with 2nd-order PT.

    Parameters
    ----------
    k : scalar, int
        k = 0 is the ground state and k = 1 is the first excited state.
    F : scalar
        the external field strength
    n_basis : scalar, int
        the number of terms to include in the sum over states in the second order pert. th. correction.

    Returns
    -------
    energy_pt2 : scalar
        The estimated energy of the kth-excited state of the particle in a box of length 2 in field F.
    """
    # It makes no sense for n_basis to be less than k.
    assert k < n_basis, "The excitation level of interest should be smaller than n_basis"

    # Unperturbed energies of the first n_basis states in a.u.
    energy = (np.pi**2 / 8.) * np.array([(n + 1)**2 for n in range(n_basis)])
    V = compute_V(n_basis)

    # second derivative of the energy with respect to the field (sum over states)
    der2 = 0
    for j in range(n_basis):
        if j != k:
            der2 += 2 * V[j, k]**2 / (energy[k] - energy[j])

    return energy[k] + der2 * F**2 / 2


def energy_variational(F):
    """Ground state energy for an electron in a box of length 2 in the field F estimated with the var. principle.

    The variational wavefunction ansatz is psi(x) = (1+cx)cos(pi*x/2) where c is a variational parameter.
    """
    gamma = 1/3 - 2/np.pi**2

    def func(c):
        return (np.pi**2/8*(1+gamma*c**2)+c**2/2 - 2*c*gamma*F) / (1 + gamma * c**2)

    res = minimize_scalar(func, (0, 1))
    return res.fun


def energy_basis(F, n_basis):
    """Eigenenergies in a.u. of an electron in a box of length 2 in the field F estimated by basis-set expansion.

    n_basis basis functions from the F=0 case are used.

    Parameters
    ----------
    F : scalar
        the external field strength
    n_basis : scalar, int
        the number of unperturbed eigenfunctions to include in the basis set.

    Returns
    -------
    energy_basis_exp : array_like
        list of n_basis eigenenergies
    """
    energy = (np.pi**2 / 8.) * np.array([(n + 1)**2 for n in range(n_basis)])
    V = compute_V(n_basis)

    # assign Hamiltonian to the potential matrix, times the field strength:
    h = F * V
    np.fill_diagonal(h, energy)

    # solve Hc = Ec to get eigenvalues E
    e_vals = eigh(h, None, eigvals_only=True)
    return e_vals

print("Energy of these models vs. reference values:")
print("Energy of the unperturbed ground state (field = 0):", np.pi**2 / 8.)
print(" ")
print("Field value: ", 1./16)
print("Exact Energy of the ground state:", 10.3685/8 - 1./16)
print("Energy of the ground state estimated with 2nd-order perturbation theory:", energy_pt2(0,1./16,50))
print("Energy of the ground state estimated with the variational principle:", energy_variational(1./16))
print("Energy of the ground state estimated with basis set expansion:", energy_basis(1./16,50)[0])
print(" ")
# the ground-state estimates below are all evaluated at F = 25/8
print("Field value: ", 25./8)
print("Exact Energy of the ground state:", 32.2505/8 - 25./8)
print("Energy of the ground state estimated with 2nd-order perturbation theory:", energy_pt2(0,25./8,50))
print("Energy of the ground state estimated with the variational principle:", energy_variational(25./8))
print("Energy of the ground state estimated with basis set expansion:", energy_basis(25./8,50)[0])
print(" ")
print(" ")
print("Energy of the unperturbed first excited state (field = 0):", np.pi**2 * 2**2 / 8.)
print(" ")
print("Field value: ", 1./16)
print("Exact Energy of the first excited state:", 39.9787/8 - 1./16)
print("Energy of the first excited state estimated with 2nd-order perturbation theory:", energy_pt2(1,1./16,50))
print("Energy of the first excited state estimated with basis set expansion:", energy_basis(1./16,50)[1])
print(" ")
# NOTE: the reference value below corresponds to F = 25/8, but the estimates are
# evaluated at F = 25/4, so these lines are not a like-for-like comparison.
print("Field value: ", 25./4)
print("Exact Energy of the first excited state:", 65.177/8 - 25./8)
print("Energy of the first excited state estimated with 2nd-order perturbation theory:", energy_pt2(1,25./4,50))
print("Energy of the first excited state estimated with basis set expansion:", energy_basis(25./4,50)[1])
print(" ")
print(" ")
print("Energy of the unperturbed second excited state (field = 0):", np.pi**2 * 3**2 / 8.)
print(" ")
print("Field value: ", 1./16)
print("Exact Energy of the second excited state:", 89.3266/8 - 1./16)
print("Energy of the second excited state estimated with 2nd-order perturbation theory:", energy_pt2(2,1./16,50))
print("Energy of the second excited state estimated with basis set expansion:", energy_basis(1./16,50)[2])
print(" ")
# NOTE: as above, the reference value is for F = 25/8 while the estimates use F = 25/4.
print("Field value: ", 25./4)
print("Exact Energy of the second excited state:", 114.309/8 - 25./8)
print("Energy of the second excited state estimated with 2nd-order perturbation theory:", energy_pt2(2,25./4,50))
print("Energy of the second excited state estimated with basis set expansion:", energy_basis(25./4,50)[2])

Energy of these models vs. reference values:
Energy of the unperturbed ground state (field = 0): 1.2337005501361697

Field value: 0.0625
Exact Energy of the ground state: 1.2335625
Energy of the ground state estimated with 2nd-order perturbation theory: 1.2335633924534153
Energy of the ground state estimated with the variational principle: 1.2335671162852282
Energy of the ground state estimated with basis set expansion: 1.2335633952295426

Field value: 3.125
Exact Energy of the ground state: 0.9063125000000003
Energy of the ground state estimated with 2nd-order perturbation theory: 0.8908063432502749
Energy of the ground state estimated with the variational principle: 0.9250111494073084
Energy of the ground state estimated with basis set expansion: 0.9063121281470085

Energy of the unperturbed first excited state (field = 0): 4.934802200544679

Field value: 0.0625
Exact Energy of the first excited state: 4.9348375
Energy of the first excited state estimated with 2nd-order perturbation theory: 4.93484310142371
Energy of the first excited state estimated with basis set expansion: 4.934843098735421

Field value: 6.25
Exact Energy of the first excited state: 5.022125000000001
Energy of the first excited state estimated with 2nd-order perturbation theory: 5.343810990856903
Energy of the first excited state estimated with basis set expansion: 5.1618507952006745

Energy of the unperturbed second excited state (field = 0): 11.103304951225528

Field value: 0.0625
Exact Energy of the second excited state: 11.103325
Energy of the second excited state estimated with 2nd-order perturbation theory: 11.103329317896025
Energy of the second excited state estimated with basis set expansion: 11.103329317810104

Field value: 6.25
Exact Energy of the second excited state: 11.163625
Energy of the second excited state estimated with 2nd-order perturbation theory: 11.34697165619853
Energy of the second excited state estimated with basis set expansion: 11.33691393380618

# user-specified parameters
F = 25.0 / 8
nbasis = 20

# plot basis set convergence of energy estimates at a given field
# ---------------------------------------------------------------
# evaluate energy for a range of basis functions at a given field
n_values = np.arange(2, 11, 1)
e_pt2_basis = np.array([energy_pt2(0, F, n) for n in n_values])
e_var_basis = np.repeat(energy_variational(F), len(n_values))
e_exp_basis = np.array([energy_basis(F, n)[0] for n in n_values])

# evaluate energy for a range of fields at a given basis
f_values = np.arange(0.0, 100., 5.)
e_pt2_field = np.array([energy_pt2(0, f, nbasis) for f in f_values])
e_var_field = np.array([energy_variational(f) for f in f_values])
e_exp_field = np.array([energy_basis(f, nbasis)[0] for f in f_values])

plt.rcParams['figure.figsize'] = [15, 8]
fig, axes = plt.subplots(1, 2)
# fig.suptitle("Basis Set Convergence of Particle-in-a-Box with Jacobi Basis", fontsize=24, fontweight='bold')

for index, axis in enumerate(axes.ravel()):
    if index == 0:
        # plot approximate energy at a fixed field
        axis.plot(n_values, e_pt2_basis, marker='o', linestyle='--', label='PT2')
        axis.plot(n_values, e_var_basis, marker='', linestyle='-', label='Variational')
        axis.plot(n_values, e_exp_basis, marker='x', linestyle='-', label='Basis Expansion')
        # set axes labels
        axis.set_xlabel("Number of Basis Functions", fontsize=12, fontweight='bold')
        axis.set_ylabel("Ground-State Energy [a.u.]", fontsize=12, fontweight='bold')
        axis.set_title(f"Field Strength = {F}", fontsize=24, fontweight='bold')
        axis.legend(frameon=False, fontsize=14)
    else:
        # plot approximate energy at a fixed basis
        axis.plot(f_values, e_pt2_field, marker='o', linestyle='--', label='PT2')
        axis.plot(f_values, e_var_field, marker='', linestyle='-', label='Variational')
        axis.plot(f_values, e_exp_field, marker='x', linestyle='-', label='Basis Expansion')
        # set axes labels
        axis.set_xlabel("Field Strength [a.u.]", fontsize=12, fontweight='bold')
        axis.set_ylabel("Ground-State Energy [a.u.]", fontsize=12, fontweight='bold')
        axis.set_title(f"Number of Basis = {nbasis}", fontsize=24, fontweight='bold')
        axis.legend(frameon=False, fontsize=14)

plt.show()

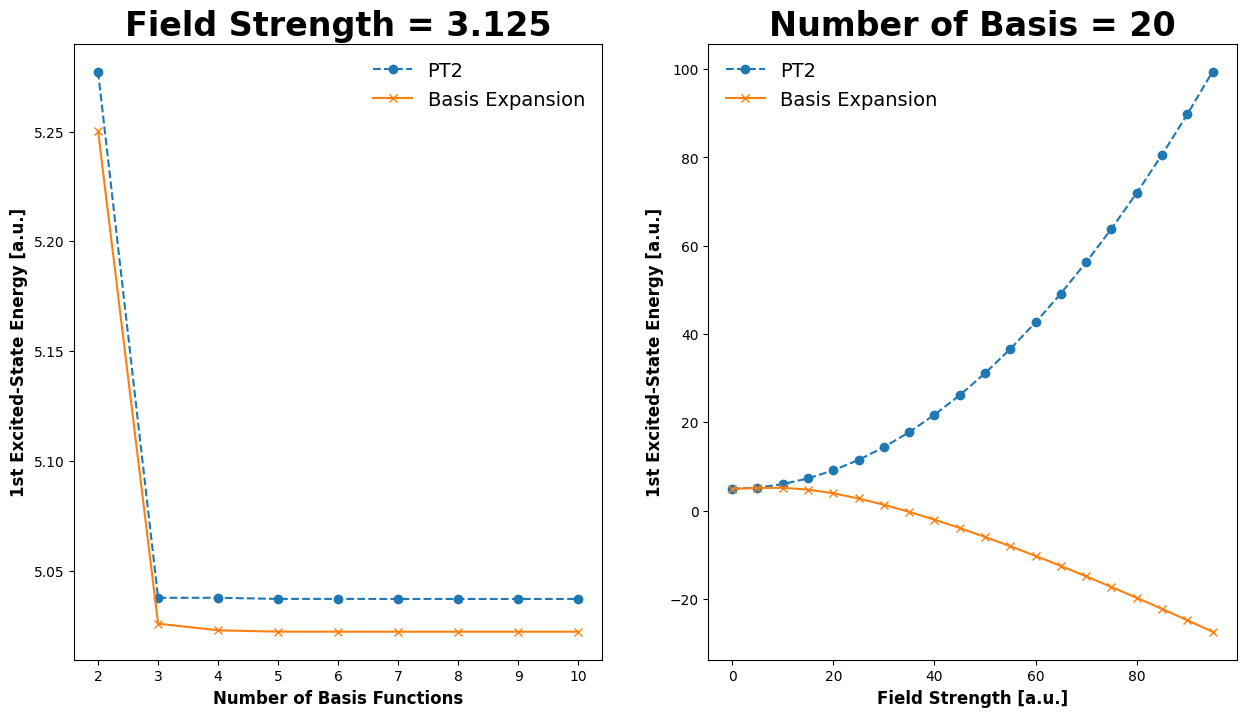

```python
# user-specified parameters
F = 25.0 / 8
nbasis = 20

# plot basis set convergence of 1st excited state energy at a given field
# ---------------------------------------------------------------
# relies on np, plt, energy_pt2, and energy_basis,
# which are assumed to be defined in earlier cells

# evaluate energy for a range of basis functions at a given field
n_values = np.arange(2, 11, 1)
e_pt2_basis = np.array([energy_pt2(1, F, n) for n in n_values])
e_exp_basis = np.array([energy_basis(F, n)[1] for n in n_values])

# evaluate energy for a range of fields at a given basis
f_values = np.arange(0.0, 100., 5.)
e_pt2_field = np.array([energy_pt2(1, f, nbasis) for f in f_values])
e_exp_field = np.array([energy_basis(f, nbasis)[1] for f in f_values])

plt.rcParams['figure.figsize'] = [15, 8]
fig, axes = plt.subplots(1, 2)
# fig.suptitle("Basis Set Convergence of Particle-in-a-Box with Jacobi Basis", fontsize=24, fontweight='bold')

for index, axis in enumerate(axes.ravel()):
    if index == 0:
        # plot approximate energy at a fixed field
        axis.plot(n_values, e_pt2_basis, marker='o', linestyle='--', label='PT2')
        axis.plot(n_values, e_exp_basis, marker='x', linestyle='-', label='Basis Expansion')
        # set axes labels
        axis.set_xlabel("Number of Basis Functions", fontsize=12, fontweight='bold')
        axis.set_ylabel("1st Excited-State Energy [a.u.]", fontsize=12, fontweight='bold')
        axis.set_title(f"Field Strength = {F}", fontsize=24, fontweight='bold')
        axis.legend(frameon=False, fontsize=14)
    else:
        # plot approximate energy at a fixed basis
        axis.plot(f_values, e_pt2_field, marker='o', linestyle='--', label='PT2')
        axis.plot(f_values, e_exp_field, marker='x', linestyle='-', label='Basis Expansion')
        # set axes labels
        axis.set_xlabel("Field Strength [a.u.]", fontsize=12, fontweight='bold')
        axis.set_ylabel("1st Excited-State Energy [a.u.]", fontsize=12, fontweight='bold')
        axis.set_title(f"Number of Basis = {nbasis}", fontsize=24, fontweight='bold')
        axis.legend(frameon=False, fontsize=14)

plt.show()
```
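The comparison plotted above can also be checked with plain numbers. The following is a minimal, self-contained sketch, not the notebook's own implementation. It assumes a particle in a box on \([-1, 1]\) in atomic units, which is consistent with the unperturbed energies \(n^2\pi^2/8\) printed above, and a perturbation \(V(x) = Fx\). The names `box_energies`, `position_matrix`, `energy_basis_sketch`, and `energy_pt2_sketch` are hypothetical stand-ins for the `energy_basis` and `energy_pt2` functions used in the cells above.

```python
import numpy as np


def box_energies(nbasis):
    """Unperturbed energies n^2 pi^2 / 8 for a box on [-1, 1] (assumed units: a.u.)."""
    n = np.arange(1, nbasis + 1)
    return n**2 * np.pi**2 / 8.0


def position_matrix(nbasis):
    """Matrix elements <m|x|n> in the particle-in-a-box basis on [-1, 1].

    Elements vanish unless m + n is odd; the diagonal is zero because the
    box is centered at the origin.
    """
    n = np.arange(1, nbasis + 1)
    m_grid, n_grid = np.meshgrid(n, n, indexing="ij")
    x = np.zeros((nbasis, nbasis))
    odd = (m_grid + n_grid) % 2 == 1
    x[odd] = -16.0 * m_grid[odd] * n_grid[odd] / (
        np.pi**2 * (m_grid[odd]**2 - n_grid[odd]**2)**2
    )
    return x


def energy_basis_sketch(field, nbasis):
    """All eigenvalues of H = H0 + field * x in an orthonormal basis (S = I)."""
    hamiltonian = np.diag(box_energies(nbasis)) + field * position_matrix(nbasis)
    return np.linalg.eigvalsh(hamiltonian)


def energy_pt2_sketch(state, field, nbasis):
    """Energy of `state` (0-based index) through second order in the field."""
    e0 = box_energies(nbasis)
    x = position_matrix(nbasis)
    others = np.arange(nbasis) != state
    first_order = field * x[state, state]
    second_order = field**2 * np.sum(x[others, state]**2 / (e0[state] - e0[others]))
    return e0[state] + first_order + second_order


# compare the two estimates for the first excited state at a weak and a strong field
for field in (0.0625, 6.25):
    e_pt2 = energy_pt2_sketch(1, field, 20)
    e_exp = energy_basis_sketch(field, 20)[1]
    print(f"F = {field}: PT2 = {e_pt2:.6f}, basis expansion = {e_exp:.6f}")
```

Under these assumptions the two estimates should agree closely at the weak field and separate visibly at the strong field, which is the trend in the right-hand panel of the plot.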

🪞 Self-Reflection

When is a basis set appropriate? When is perturbation theory more appropriate?

Consider the hydrogen molecule ion, \(\text{H}_2^+\). Is it more sensible to use the secular equation (basis-set-expansion) or perturbation theory? What if the bond length is very small? What if the bond length is very large?

🤔 Thought-Provoking Questions

Show that if you minimize the energy as a function of the basis-set coefficients using the variational principle, then you obtain the secular equation.

If a uniform external electric field of magnitude \(F\) in the \(\hat{\mathbf{u}} = [u_x, u_y, u_z]^T\) direction is applied to a particle with charge \(q\), the potential \(V(x,y,z) = -qF(u_x x + u_y y + u_z z)\) is added to the Hamiltonian. (This follows from the fact that the force applied to the particle is proportional to the electric field, \(\text{force} = q \vec{E} = q F \hat{\mathbf{u}}\), and the force is \(\text{force} = - \nabla V(x,y,z)\).) If the field is weak, then perturbation theory can be used, and the energy can be written as a Taylor series in \(F\). The coefficients of the Taylor series give the dipole moment (\(\mu\)), dipole polarizability (\(\alpha\)), first dipole hyperpolarizability (\(\beta\)), and second dipole hyperpolarizability (\(\gamma\)) in the \(\hat{\mathbf{u}}\) direction.

The dipole moment, \(\mu\), of any spherical system is zero. Explain why.

The polarizability, \(\alpha\), of any system in its ground state is always positive. Explain why.

The Hellmann-Feynman theorem indicates that given the ground-state wavefunction for a molecule, the force on the nuclei can be obtained. Explain how.

What does it mean that perturbation theory is inaccurate when the perturbation is large?

Can you explain why the energy goes down when the electron-in-a-box is placed in an external field?

For a sufficiently highly excited state of the particle-in-a-box, the effect of an external electric field is negligible. Why is this true intuitively? Can you show it graphically? Can you explain it mathematically?
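For the electric-field question above, the Taylor-series coefficients can be estimated numerically by finite differences of the energy with respect to the field. The sketch below is only an illustration under stated assumptions: it takes the common sign convention \(E(F) = E(0) - \mu F - \tfrac{1}{2}\alpha F^2 - \tfrac{1}{3!}\beta F^3 - \tfrac{1}{4!}\gamma F^4 - \cdots\) (the text does not write the series out), a particle with \(q = -1\) in a one-dimensional box on \([-1, 1]\) in atomic units so that \(V(x) = Fx\), and hypothetical function names `ground_state_energy` and `finite_field_properties`.

```python
import numpy as np


def ground_state_energy(field, nbasis=30):
    """Lowest eigenvalue of H = H0 + field * x for a box on [-1, 1] (assumed a.u.)."""
    n = np.arange(1, nbasis + 1)
    m_grid, n_grid = np.meshgrid(n, n, indexing="ij")
    # <m|x|n> is nonzero only when m + n is odd
    x = np.zeros((nbasis, nbasis))
    odd = (m_grid + n_grid) % 2 == 1
    x[odd] = -16.0 * m_grid[odd] * n_grid[odd] / (
        np.pi**2 * (m_grid[odd]**2 - n_grid[odd]**2)**2
    )
    hamiltonian = np.diag(n**2 * np.pi**2 / 8.0) + field * x
    return np.linalg.eigvalsh(hamiltonian)[0]


def finite_field_properties(step=1e-2):
    """Central-difference estimates of mu = -dE/dF and alpha = -d^2E/dF^2 at F = 0."""
    e_plus = ground_state_energy(+step)
    e_zero = ground_state_energy(0.0)
    e_minus = ground_state_energy(-step)
    mu = -(e_plus - e_minus) / (2.0 * step)
    alpha = -(e_plus - 2.0 * e_zero + e_minus) / step**2
    return mu, alpha


mu, alpha = finite_field_properties()
print(f"dipole moment  mu    = {mu:.2e}")
print(f"polarizability alpha = {alpha:.5f}")
```

In this symmetric box the estimated dipole moment should vanish to numerical precision, and the ground-state polarizability should come out positive, in line with the two sub-questions above. The step size is a judgment call: too large and higher-order terms contaminate the estimate, too small and round-off dominates.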

🔁 Recapitulation

What is the secular equation?

What is the Hellmann-Feynman theorem?

How is the Hellmann-Feynman theorem related to perturbation theory?

What is perturbation theory? What is the expression for the first-order perturbed wavefunction?

🔮 Next Up…

Multielectron systems

Approximate methods for multielectron systems.

📚 References

My favorite sources for this material are:

D. A. McQuarrie, Quantum Chemistry (University Science Books, Mill Valley, California, 1983)

There are also some excellent Wikipedia articles: